Synthetic media, fueled by the recent boom in GenAI models, holds immense potential for creativity, storytelling, and innovation. However, its application also raises critical questions about transparency, ethics, and regulation. A balanced, nuanced framework is essential to distinguish creative uses from potentially harmful applications that call for regulation. This post unpacks the context, key roles, regulatory needs, and mechanisms for disclosure, offering a roadmap for approaching synthetic media responsibly. It builds mainly on prior work, especially the [Synthetic Media Framework](https://syntheticmedia.partnershiponai.org/) from the Partnership on AI.

1. Context and Relevance

Not all synthetic media demands scrutiny or regulation. Many instances, such as artistic or surreal creations, don’t pose harm.

However, regulation becomes critical when synthetic media is employed maliciously, especially to mislead, harm, or deceive.

Key scenarios where synthetic media should be clearly marked:

Misrepresentation of individuals or entities: Deepfakes or synthetic portrayals that inaccurately depict real people, organizations, or events.

Creation of photorealistic personas: Fabricated representations of people performing actions or making statements that never took place.

Manipulation of authentic media: Altering genuine content by adding or removing elements or generating fully synthetic scenes intended to deceive audiences.
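The three scenarios above can be read as a simple decision rule: if any one of them applies, the media should be clearly marked. The following is a minimal sketch of that rule. The class, field, and function names are hypothetical and chosen only for illustration, and deciding whether a flag is true remains a human or editorial judgment that the code does not attempt to automate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MediaAssessment:
    """Hypothetical flags, one per scenario listed above."""

    # Inaccurately depicts real people, organizations, or events.
    misrepresents_real_subject: bool = False
    # Shows a fabricated photorealistic persona acting or speaking.
    fabricated_photorealistic_persona: bool = False
    # Alters genuine content or generates a scene intended to deceive.
    manipulates_authentic_media: bool = False


def should_be_marked(assessment: MediaAssessment) -> bool:
    """Return True if at least one of the three scenarios applies."""
    return (
        assessment.misrepresents_real_subject
        or assessment.fabricated_photorealistic_persona
        or assessment.manipulates_authentic_media
    )


# A surreal artwork that matches none of the scenarios.
artwork = MediaAssessment()
# A genuine photo with an element removed to mislead viewers.
edited_photo = MediaAssessment(manipulates_authentic_media=True)
```

Under these assumptions, `should_be_marked(artwork)` is false and `should_be_marked(edited_photo)` is true.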

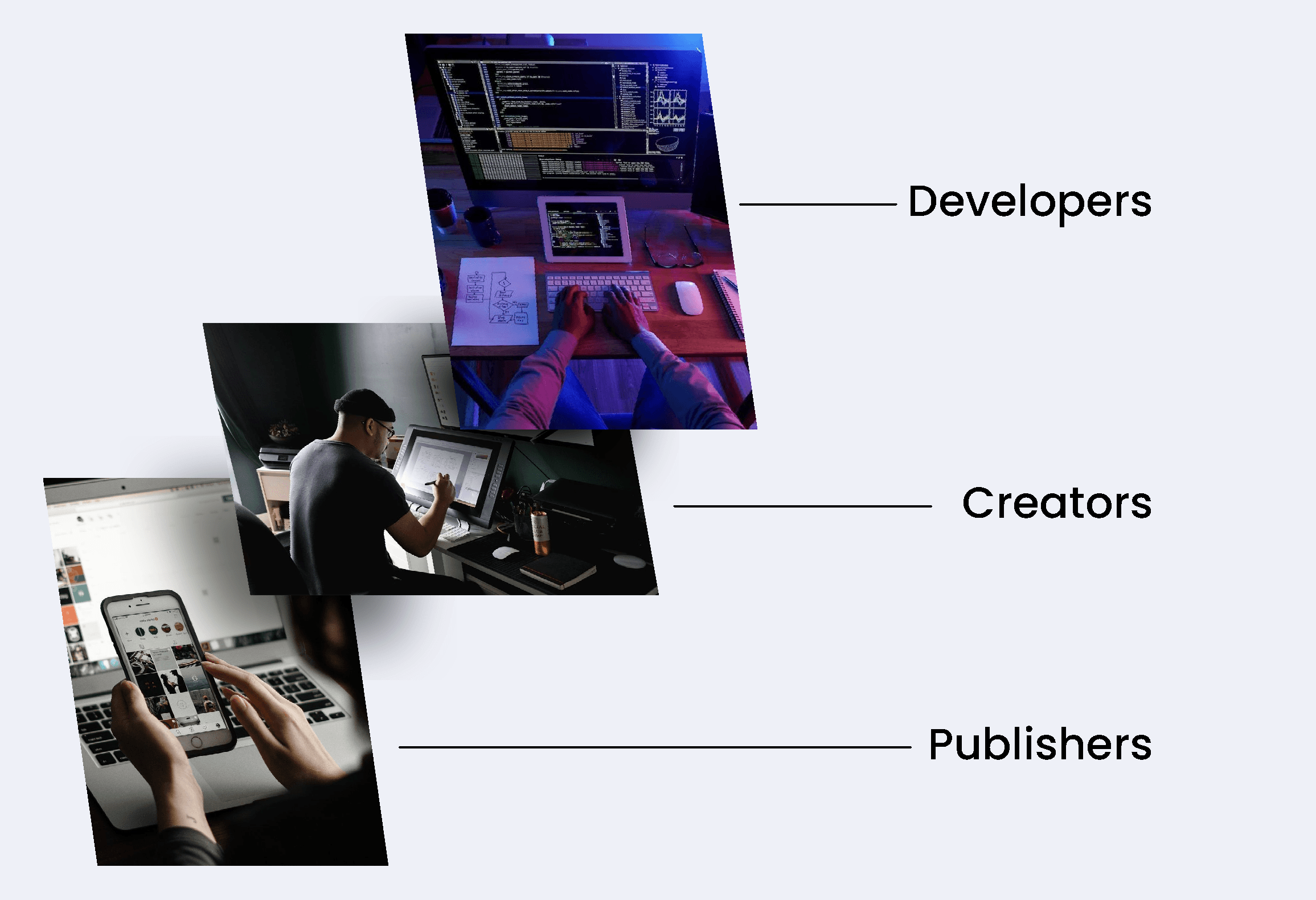

2. Roles and Actors

Understanding the various actors involved in synthetic media is critical for creating effective regulatory and operational frameworks.

Developers

These are the builders of synthetic media technology and infrastructure. They include, for instance:

B2B and B2C toolmakers

Open-source developers

Academic researchers

Creators

Individuals and organizations that produce synthetic media, including:

Large-scale B2B content producers

Hobbyists, artists, and influencers

Civil society members, such as activists and satirists

Publishers

Entities distributing synthetic media fall into two categories:

Institutions with editorial oversight (e.g., media outlets, broadcasters): These organizations host and create first-party content, often editorially managing synthetic media.

Online platforms with passive hosting (e.g., social media platforms): These platforms display user-generated or third-party synthetic content.

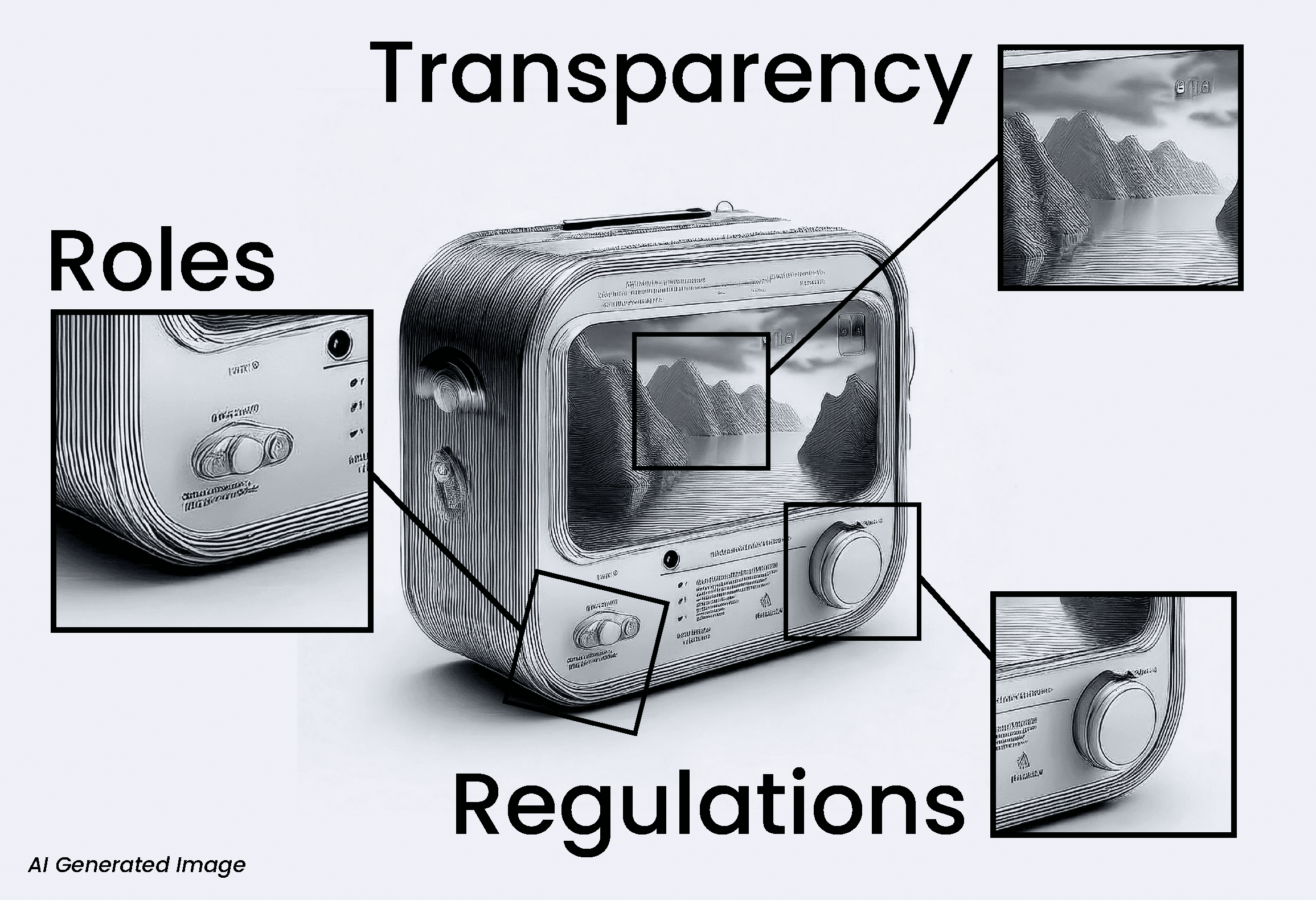

4. Mechanisms for Disclosure

Effective transparency requires a combination of direct and indirect disclosure methods.

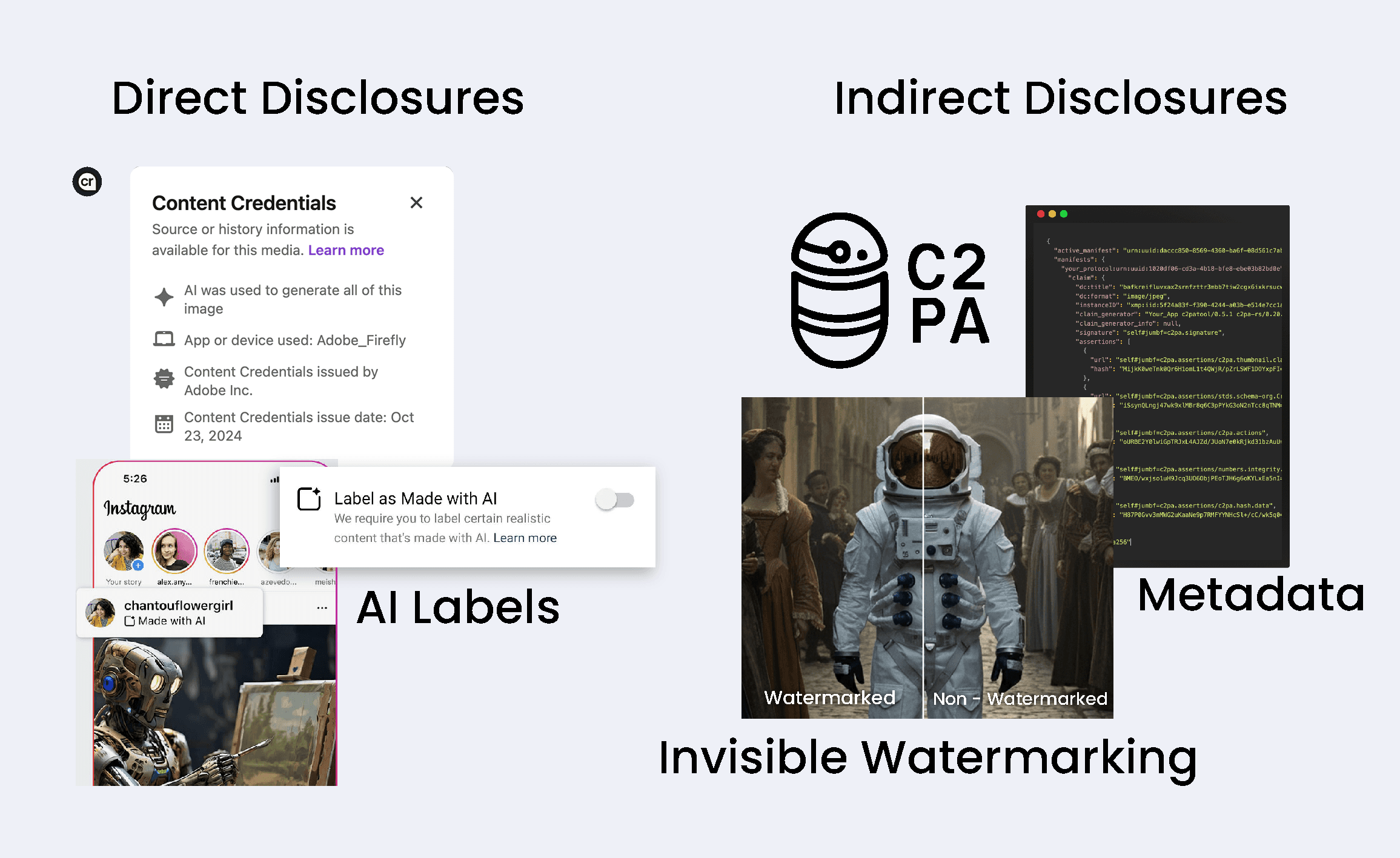

Direct Disclosures

Watermarks, labels, or text clearly displayed on the media.

Audiovisual disclosures in video and audio files.

Indirect Disclosures (Machine-Readable)

Imperceptible watermarking: Embedded signals invisible to the human eye but detectable by technology.

C2PA (Coalition for Content Provenance and Authenticity): A standard for secure, machine-readable metadata that tracks content origin and modifications.

Regular metadata (IPTC): Information embedded within media files to describe their creation and transformation history.

Indirect disclosures often provide the signals that publishers use to make direct disclosures. For example, publishers can recognize and validate C2PA metadata (an indirect disclosure) to trigger visible labels or Content Credentials, which are the visual representation of C2PA metadata.
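The hand-off from indirect to direct disclosure can be sketched as a small publisher-side rule. This is a minimal illustration rather than a real C2PA or IPTC integration: the `IndirectSignals` fields, the `choose_visible_label` function, and the label wording are all hypothetical, and the sketch assumes a separate validation step has already inspected the file and filled in the fields. The two source-type values are terms from the IPTC Digital Source Type vocabulary that indicate generative AI involvement.

```python
from dataclasses import dataclass
from typing import Optional

# IPTC Digital Source Type terms that indicate generative AI involvement.
AI_SOURCE_TYPES = {
    "trainedAlgorithmicMedia",
    "compositeWithTrainedAlgorithmicMedia",
}


@dataclass(frozen=True)
class IndirectSignals:
    """Hypothetical summary of machine-readable signals found in a file."""

    # True if a C2PA manifest was present and passed validation (assumed
    # to be checked by a separate tool, not by this sketch).
    c2pa_manifest_valid: bool = False
    # Digital source type read from C2PA or IPTC metadata, if any.
    digital_source_type: Optional[str] = None
    # True if an imperceptible watermark detector fired.
    watermark_detected: bool = False


def choose_visible_label(signals: IndirectSignals) -> Optional[str]:
    """Map indirect signals to a visible label, or None if no label applies."""
    declares_ai = signals.digital_source_type in AI_SOURCE_TYPES
    if signals.c2pa_manifest_valid and declares_ai:
        # Validated provenance is the strongest signal in this sketch.
        return "AI-generated (verified provenance)"
    if declares_ai or signals.watermark_detected:
        # Unvalidated metadata or a watermark still warrants a label.
        return "AI-generated"
    return None


verified = IndirectSignals(
    c2pa_manifest_valid=True,
    digital_source_type="trainedAlgorithmicMedia",
)
watermarked = IndirectSignals(watermark_detected=True)
unsignaled = IndirectSignals()
```

Ranking validated C2PA metadata above the other signals is a design choice made for this sketch. A publisher could equally combine the signals differently or add a human review step before a label is shown.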

Conclusion

A holistic framework for synthetic media acknowledges the diversity of its use cases and stakeholders. By understanding the roles of developers, creators, and publishers and implementing robust transparency mechanisms, we can foster responsible innovation while safeguarding against misuse. Whether through visible watermarks or imperceptible metadata, effective disclosures are the key to maintaining trust in an increasingly synthetic world.